OBJECTIVE

Verify whether two groups are significantly different.

DESCRIPTION

There are three main applications of the t-test:

- One-sample t-test: compare a sample mean with a known or hypothesized population mean;

- Two-sample t-test: compare two sample means;

- Paired t-test: compare two means of the same sample in different situations (e.g. before and after a treatment).[1]

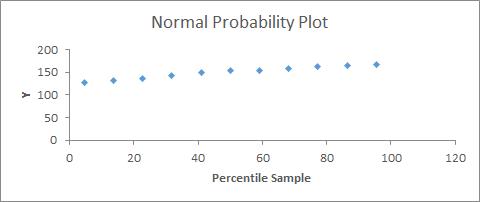

To perform a t-test, it is necessary to check the normality assumption (see 36.INTRODUCTION TO REGRESSIONS); however, the t-test tolerates deviations from normality as long as the sample size is large and the two samples have a similar number of elements. If the deviations from normality are substantial, we can either transform the data or use a non-parametric test (see Figure 40 in chapter 41.INTRODUCTION TO HYPOTHESIS TESTING).

An alternative to the t-test is the z-test; however, besides the normality assumption, it requires a larger sample size (usually > 30) and a known population standard deviation.
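As a rough illustration of the z-test mentioned above, the following sketch uses only the Python standard library. The function name `z_test` and its parameters are hypothetical, and the population standard deviation is assumed to be known in advance.

```python
from math import sqrt
from statistics import NormalDist, mean


def z_test(sample, pop_mean, pop_sd):
    """Two-tailed one-sample z-test (population standard deviation known)."""
    n = len(sample)
    # Standard error uses the population sd, not the sample sd.
    z = (mean(sample) - pop_mean) / (pop_sd / sqrt(n))
    # Probability of a value at least this extreme in either tail.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value
```

The only difference from a t-test here is that the standard normal distribution replaces the t distribution, which is why the population standard deviation must be supplied.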

Each of the three kinds of t-tests described above has two variations depending on the kind of hypothesis to be tested. If the alternative hypothesis is that the two means are different, then a two-tailed test is necessary. If the hypothesis is that one mean is higher or lower than the other one, then a one-tailed test is required. It is also possible to specify in the hypothesis that the difference will be larger than a certain number (in two-sample and paired t-tests).

After performing the test, we can reject the null hypothesis (there are no differences) if the p-value is lower than the alpha (α) chosen (usually 0.05) or, equivalently for a two-tailed test, if the t-stat value is not between the negative and the positive t-critical value (see the template). The critical value of t for a two-tailed test (t-critical two-tail) is also used to calculate the confidence interval, whose confidence level is 1 minus the α chosen (if we choose 0.05 we will have a 95% confidence interval).
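The decision rule above can be sketched in Python without external libraries. This is an illustration under stated assumptions, not the template's formulas: the helper names (`t_pdf`, `two_tailed_p`, `t_critical_two_tail`, `reject_null`) are hypothetical, the p-value is approximated by integrating the t density with Simpson's rule, and the critical value is found by bisection.

```python
from math import exp, lgamma, log, pi


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = lgamma((df + 1) / 2) - lgamma(df / 2) - 0.5 * log(df * pi)
    return exp(log_c - (df + 1) / 2 * log(1 + x * x / df))


def two_tailed_p(t_stat, df):
    """Approximate P(|T| >= |t_stat|) with Simpson's rule on [0, |t_stat|]."""
    upper = abs(t_stat)
    if upper == 0:
        return 1.0
    steps = 2 * max(100, int(upper * 100))  # even number of intervals
    h = upper / steps
    total = t_pdf(0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = total * h / 3  # P(0 <= T <= |t_stat|)
    return max(0.0, 1 - 2 * central_area)


def t_critical_two_tail(alpha, df):
    """Find t such that the two-tailed p-value equals alpha (bisection)."""
    low, high = 0.0, 1.0
    while two_tailed_p(high, df) > alpha:  # widen until the root is bracketed
        high *= 2
    for _ in range(60):
        mid = (low + high) / 2
        if two_tailed_p(mid, df) > alpha:
            low = mid
        else:
            high = mid
    return (low + high) / 2


def reject_null(t_stat, df, alpha=0.05):
    """Two-tailed decision: reject when the p-value is below alpha."""
    return two_tailed_p(t_stat, df) < alpha
```

For a two-tailed test, checking `two_tailed_p(t_stat, df) < alpha` and checking `abs(t_stat) > t_critical_two_tail(alpha, df)` lead to the same decision, which is why either criterion can be read from the output.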

ONE-SAMPLE T-TEST

With this test we compare a sample mean with the mean of the population. For example, we have a shampoo factory and we know that each bottle has to be filled with 300 ml of shampoo. To control the quality of the final product, we take random samples from the production line and measure the amount of shampoo.

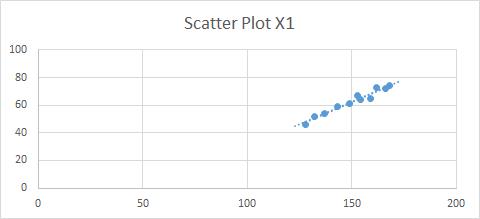

Since we want to stop and fix the production line if the amount of shampoo is smaller or larger than the expected quantity (300 ml), we have to run a two-tailed test. Figure 43 shows the input data as well as the calculated standard deviation and sample mean, which is 295. A significance level (alpha) of 0.05 is chosen. We then calculate the t-critical value and p-value (the formulas can be checked in the template).

Figure 44: Results of a One-Sample t-Test

Since the p-value is lower than the alpha (0.05), we conclude that the difference between the sample mean and the expected 300 ml is significant and that we should fix our production line. The results also include the 95% confidence interval, which means that we are 95% confident that the mean amount of shampoo per bottle lies between 292 ml and 298 ml.
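The calculations behind a one-sample test can be sketched as follows. The function names are hypothetical, the template's own formulas may differ in detail, and the critical value is passed in as a parameter (it would normally come from a t table or from the spreadsheet).

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(sample, target_mean):
    """Return the t statistic and degrees of freedom for a one-sample t-test."""
    n = len(sample)
    std_error = stdev(sample) / sqrt(n)  # stdev uses the n - 1 denominator
    return (mean(sample) - target_mean) / std_error, n - 1


def mean_confidence_interval(sample, t_critical):
    """Confidence interval for the mean, given a two-tailed critical value."""
    margin = t_critical * stdev(sample) / sqrt(len(sample))
    return mean(sample) - margin, mean(sample) + margin
```

If the target value (300 ml in the shampoo example) falls outside the interval returned by `mean_confidence_interval`, the two-tailed test at the matching alpha rejects the null hypothesis.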

TWO-SAMPLE T-TEST

A practical example would be to determine whether male clients buy more or less than female ones.

First of all, we should define our hypothesis. In our example our hypothesis is that male and female clients do not buy the same amount of goods, so we should use a two-tailed test; that is, we do not assume that one group buys more than the other one. If, on the other hand, we wanted to test whether males buy more, we would use a one-tailed test.

In the Excel add-in “Data Analysis,” we choose the option “t-Test: Two-Sample Assuming Unequal Variances” by default: if the variances are in fact equal, the results will be practically the same, whereas if we assume equal variances and they turn out to be unequal, then the results will be unreliable. We select the data of the two samples and specify our significance level (alpha, by default 0.05).
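To make the unequal-variances option more concrete, here is a minimal sketch of the usual calculation behind it (Welch's t statistic with the Welch-Satterthwaite degrees of freedom). The function name `welch_t` is hypothetical and the code is an illustration, not a reproduction of Excel's implementation.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """t statistic and approximate degrees of freedom, unequal variances."""
    n_a, n_b = len(sample_a), len(sample_b)
    # Squared standard error contributed by each sample.
    se2_a = variance(sample_a) / n_a
    se2_b = variance(sample_b) / n_b
    t_stat = (mean(sample_a) - mean(sample_b)) / sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1)
    )
    return t_stat, df
```

When both samples have the same size and the same sample variance, the degrees of freedom reduce to `n_a + n_b - 2`, the value used by the equal-variances test, which illustrates why choosing the unequal-variances option costs little when the variances are in fact equal.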

Figure 45: Output of a Two-Sample t-Test Assuming Unequal Variances

Since we are testing for a difference in either direction, positive or negative, we have to use the two-tailed p-value and two-tailed t-critical value from the output. In this example the difference is significant, since the p-value is smaller than the chosen alpha (0.05).

Confidence intervals are also calculated in the template, concluding that we are 95% confident that women buy, on average, between 8 and 37 more products than men.

PAIRED T-TEST

We want to test two different products on several potential consumers to decide which one is better by asking participants to try each one and rate them on a scale from 1 to 10. Since we have decided to use the same group to test both products, we are going to run a paired two-tailed t-test. The alpha chosen is 0.05.

Figure 46: Output of a Paired t-Test

The results of the example show that there is no significant difference in the rating of the two products, since the p-value (two-tail) is larger than the alpha (0.05). The template also contains the confidence interval of the mean difference, which in this case includes 0, consistent with the absence of a significant difference.
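A paired t-test can be viewed as a one-sample t-test on the per-participant differences, tested against a mean difference of 0. The sketch below illustrates that idea; the function name `paired_t` and the argument names are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(first, second):
    """t statistic and degrees of freedom for paired observations."""
    if len(first) != len(second):
        raise ValueError("paired samples must have the same length")
    # One difference per participant (rating of product 1 minus product 2).
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    t_stat = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t_stat, n - 1
```

Because each participant acts as their own control, only the variability of the differences enters the standard error, not the variability between participants.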

TEMPLATE

[1] In some cases it is also possible to use two samples and match each component on a certain dimension.